In Part I of this series, we looked at creating Docker images and running containers for Node.js applications. We also looked at setting up a database in a container and at how volumes and networks play a part in setting up your local development environment.

In this article we'll take a look at creating and running a development image where we can compile, add modules and debug our application all inside of a container. This helps speed up the developer setup time when moving to a new application or project.

We'll also take a quick look at using Docker Compose to help streamline the processes of setting up and running a full microservices application locally on your development machine.

- Docker installed on your development machine. You can download and install Docker Desktop from the links below:

- Sign-up for a Docker ID

- Git installed on your development machine.

- An IDE or text editor to use for editing files. I would recommend VSCode

The first thing we want to do is download the code to our local development machine. Let's do this using the following git command:

git clone git@github.com:pmckeetx/memphis.git

Now that we have the code local, let's take a look at the project structure. Open the code in your favorite IDE and expand the root level directories. You'll see the following file structure.

The application is made up of a couple of simple microservices and a front end written in React.js. It uses MongoDB as its datastore.

In Part I of this series, we created a couple of Dockerfiles for our services, ran them in containers and connected them to an instance of MongoDB running in a container.

There are many ways to use Docker and containers for local development, and a lot of it depends on your application structure. We'll start with the very basics and then progress to more complicated setups.

One of the easiest ways to start using containers in your development workflow is to use a development image. A development image is an image that has all the tools that you need to develop and compile your application with.

In this article we are using Node.js, so our image should have Node.js installed as well as npm or yarn. Let's create a development image that we can use to run our Node.js application.

Development Dockerfile

Create a local directory on your development machine that we can use as a working directory to save our Dockerfile and any other files that we'll need for our development image.

Create a Dockerfile in this folder and add the following commands.

We start off by using the node:12.18.3 official image. I've found that this image is fine for creating a development image. I like to add a couple of text editors to the image in case I want to quickly edit a file while inside the container.

We did not add an ENTRYPOINT or CMD to the Dockerfile because we will rely on the base image's ENTRYPOINT and override the CMD when we run the container.

Let's build our image.

And now we can run it.

You will be presented with a bash command prompt. Now, inside the container we can create a JavaScript file and run it with Node.js.

Run the following commands to test our image.
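As a sketch, the JavaScript file could be something like the hypothetical hello.js below, which only reports the Node.js runtime it is executing on. Run it inside the container with 'node hello.js'; the file name and message are illustrative assumptions.

```javascript
// hello.js: a hypothetical smoke-test script for the development image.
// Run it inside the container with: node hello.js
const os = require('os');

function describeRuntime() {
  // process.version is the Node.js version installed in the image.
  // os.hostname() is the container's hostname, which Docker sets to the
  // short container ID unless a hostname is specified.
  return `Hello from Node.js ${process.version} on ${os.hostname()} (${process.platform})`;
}

console.log(describeRuntime());
```

If the version printed matches the base image tag (node:12.18.3 in this article), the script really is running inside the container rather than on the host.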

Nice. It appears that we have a working development image. We can now do everything that we would do in our normal bash terminal.

If you ran the above Docker command inside of the notes-service directory, then you will have access to the code inside of the container.

You can start the notes-service by simply navigating to the /code directory and running npm run start.

The notes-service project uses MongoDB as its data store. If you remember from Part I of this series, we had to start the Mongo container manually and connect it to the same network that our notes-service is running on. We also had to create a couple of volumes so we could persist our data across restarts of our application and MongoDB.

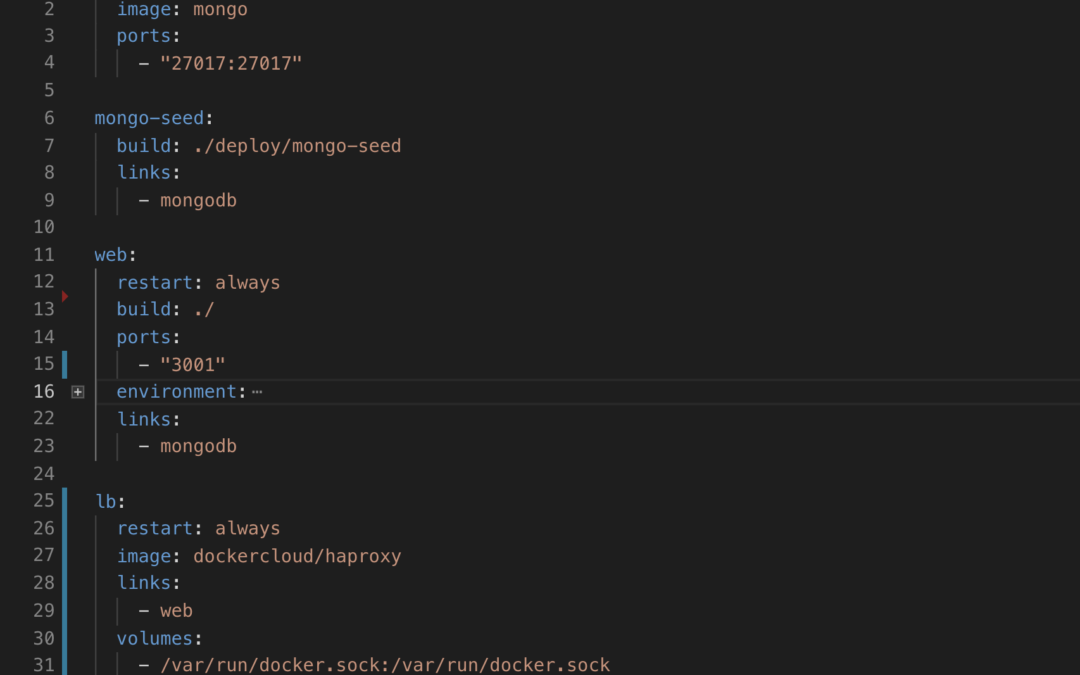

In this section, we'll create a Compose file to start our notes-service and MongoDB with one command. We'll also set up the Compose file to start the notes-service in debug mode so that we can connect a debugger to the running Node process.

Open the notes-service in your favorite IDE or text editor and create a new file named docker-compose.dev.yml. Copy and paste the following into the file.

This compose file is super convenient because now we do not have to type all the parameters to pass to the docker run command. We can declaratively do that in the compose file.

We are exposing port 9229 so that we can attach a debugger. We are also mapping our local source code into the running container so that we can make changes in our text editor and have those changes picked up in the container.
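Inside a container, the Node.js inspector has to listen on all interfaces, for example with 'node --inspect=0.0.0.0:9229 server.js', because its default address of 127.0.0.1:9229 is not reachable through a published port. As a small sketch, a service could log at startup whether the inspector is active, using Node's built-in inspector module. The debuggerStatus helper is hypothetical and not part of the notes-service.

```javascript
const inspector = require('inspector');

// Hypothetical startup helper: reports whether this process was started with
// --inspect (or --inspect-brk) and, if so, the WebSocket URL a debugger attaches to.
function debuggerStatus() {
  const url = inspector.url(); // undefined when no inspector is active
  return url
    ? `Inspector listening at ${url}`
    : 'Inspector not active; start node with --inspect=0.0.0.0:9229';
}

console.log(debuggerStatus());
```

Seeing a ws://0.0.0.0:9229/... URL in the container logs is a quick sign that the port mapping above has something to connect to.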

One other really cool feature of using a Compose file is that service names resolve as hostnames, so we can now use 'mongo' in our connection string. We use 'mongo' because that is the name we gave the MongoDB service in the Compose file.
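Compose attaches the services to a shared network on which each service name resolves as a hostname, so the service name simply takes the host position in the connection string. The sketch below splits such a string with Node's built-in WHATWG URL class to show which part that is. The yoda_notes database name is borrowed from Part I and is an assumption; your Compose file may use a different one.

```javascript
// Split a single-host MongoDB connection string into its parts.
// Replica-set strings with several comma-separated hosts are out of scope here.
function describeConnectionString(connectionString) {
  const url = new URL(connectionString);
  return {
    host: url.hostname, // the Compose service name, resolved by Docker's DNS
    port: url.port || '27017', // fall back to MongoDB's default port
    database: url.pathname.replace(/^\//, ''),
  };
}

console.log(describeConnectionString('mongodb://mongo:27017/yoda_notes'));
```

Renaming the service in the Compose file means changing the host part of this string to match.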

Let's start our application and confirm that it is running properly.

We pass the '--build' flag so Docker will build our image and then start it.

If all goes well, you should see something similar to the following:

Now let's test our API endpoint. Run the following curl command:

You should receive the following response:

We'll use the debugger that comes with the Chrome browser. Open Chrome on your machine and then type the following into the address bar.

The following screen will open.

Click the 'Open dedicated DevTools for Node' link. This will open the DevTools that are connected to the running node.js process inside our container.

Let's change the source code and then set a breakpoint.

Add the following code to the server.js file on line 19 and save the file.

If you take a look at the terminal where our compose application is running, you'll see that nodemon noticed the changes and reloaded our application.

Navigate back to the Chrome DevTools and set a breakpoint on line 20 and then run the following curl command to trigger the breakpoint.

💥 BOOM 💥 You should have seen the code break on line 20 and now you are able to use the debugger just like you would normally. You can inspect and watch variables, set conditional breakpoints, view stack traces and a bunch of other stuff.
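Besides clicking in the DevTools gutter, a breakpoint can be written directly into the code with JavaScript's debugger statement: execution pauses there whenever DevTools (or another inspector client) is attached, and the statement is ignored otherwise. A minimal sketch with a hypothetical helper function standing in for a route handler:

```javascript
// Hypothetical helper standing in for a route handler in server.js.
function notesForOwner(notes, owner) {
  // Pauses here only while a debugger is attached (e.g. via chrome://inspect);
  // with no debugger attached this statement has no effect.
  debugger;
  return notes.filter((note) => note.owner === owner);
}

const sample = [
  { name: 'this is a note', owner: 'peter' },
  { name: 'another note', owner: 'someone-else' },
];
console.log(notesForOwner(sample, 'peter'));
```

Because nodemon reloads on save, adding or removing the statement takes effect without restarting the Compose application; just remember to remove it before committing.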

In this article we took a look at creating a general development image that we can use pretty much like our normal command line. We also set up our compose file to map our source code into the running container and exposed the debugging port.

Resources

- Getting Started with Docker

- Best practices for writing Dockerfiles

- Docker Desktop

- Docker Compose

- Project skeleton samples

Docker is the de facto toolset for building modern applications and setting up a CI/CD pipeline, helping you build, ship and run your applications in containers on-prem and in the cloud.

Whether you're running on simple compute instances such as AWS EC2 or Azure VMs or something a little more fancy like a hosted Kubernetes service like AWS EKS or Azure AKS, Docker's toolset is your new BFF.

But what about your local development environment? Setting up local dev environments can be frustrating to say the least.

Remember the last time you joined a new development team?

You needed to configure your local machine, install development tools, pull repositories, fight through out-of-date onboarding docs and READMEs, and get everything running and working locally without knowing a thing about the code and its architecture. Oh, and don't forget about databases, caching layers and message queues. These are notoriously hard to set up and develop on locally.

I've never worked at a place where we didn't expect at least a week or more of on-boarding for new developers.

So what are we to do? Well, there is no silver bullet and these things are hard to do (that's why you get paid the big bucks), but with the help of Docker and its toolset, we can make things a whole lot easier.

In Part I of this tutorial, we'll walk through setting up a local development environment for a relatively complex application that uses React for its front end, Node and Express for a couple of microservices, and MongoDB for our datastore. We'll use Docker to build our images and Docker Compose to make everything a whole lot easier.

If you have any questions or comments, or just want to connect, you can reach me in our Community Slack or on Twitter at @pmckee.

Let's get started.

Prerequisites

To complete this tutorial, you will need:

- Docker installed on your development machine. You can download and install Docker Desktop from the links below:

- Sign-up for a Docker ID

- Git installed on your development machine.

- An IDE or text editor to use for editing files. I would recommend VSCode

Fork the Code Repository

The first thing we want to do is download the code to our local development machine. Let's do this using the following git command:

git clone git@github.com:pmckeetx/memphis.git

Now that we have the code local, let's take a look at the project structure. Open the code in your favorite IDE and expand the root level directories. You'll see the following file structure.

├── docker-compose.yml
├── notes-service
│   ├── config
│   ├── node_modules
│   ├── nodemon.json
│   ├── package-lock.json
│   ├── package.json
│   └── server.js
├── reading-list-service
│   ├── config
│   ├── node_modules
│   ├── nodemon.json
│   ├── package-lock.json
│   ├── package.json
│   └── server.js
├── users-service
│   ├── Dockerfile
│   ├── config
│   ├── node_modules
│   ├── nodemon.json
│   ├── package-lock.json
│   ├── package.json
│   └── server.js
└── yoda-ui
    ├── README.md
    ├── node_modules
    ├── package.json
    ├── public
    ├── src
    └── yarn.lock

The application is made up of a couple of simple microservices and a front end written in React.js. It uses MongoDB as its datastore.

Typically at this point, we would start a local version of MongoDB or start looking through the project to find out where our applications will be looking for MongoDB.

Then we would start each of our microservices independently and then finally start the UI and hope that the default configuration just works.

This can be very complicated and frustrating, especially if our microservices are using different versions of Node.js and are configured differently.

So let's walk through making this process easier by dockerizing our application and putting our database into a container.

Dockerizing Applications

Docker is a great way to provide consistent development environments. It will allow us to run each of our services and UI in a container. We'll also set up things so that we can develop locally and start our dependencies with one docker command.

The first thing we want to do is dockerize each of our applications. Let's start with the microservices because they are all written in Node.js and we'll be able to use the same Dockerfile.

Create Dockerfiles

Create a Dockerfile in the notes-service directory and add the following commands.

This is a very basic Dockerfile to use with node.js. If you are not familiar with the commands, you can start with our getting started guide. Also take a look at our reference documentation.

Building Docker Images

Now that we've created our Dockerfile, let's build our image. Make sure you're still located in the notes-service directory and run the following command:

docker build -t notes-service .

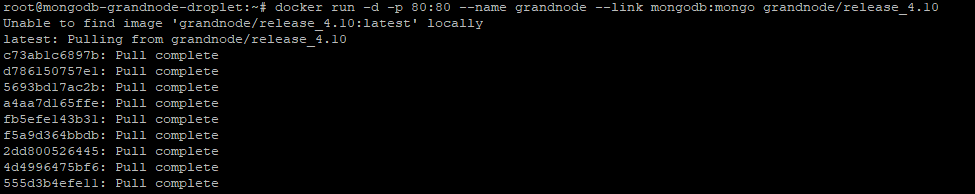

Now that we have our image built, let's run it as a container and test that it's working.

docker run --rm -p 8081:8081 --name notes notes-service

Looks like we have an issue connecting to MongoDB. Two things are broken at this point: first, we didn't provide a connection string to the application; second, we do not have MongoDB running locally.

At this point we could provide a connection string to a shared instance of our database but we want to be able to manage our database locally and not have to worry about messing up our colleagues' data they might be using to develop.

Local Database and Containers

Instead of downloading, installing, configuring and then running the MongoDB database service ourselves, we can use the Docker Official Image for MongoDB and run it in a container.

Before we run MongoDB in a container, we want to create a couple of volumes that Docker can manage to store our persistent data and configuration. I like to use the managed volumes that Docker provides instead of bind mounts. You can read all about volumes in our documentation.

Let's create our volumes now. We'll create one for the data and one for configuration of MongoDB.

docker volume create mongodb

docker volume create mongodb_config

Now we'll create a network that our application and database will use to talk with each other. This is a user-defined bridge network, which gives us a built-in DNS lookup service that we can use when creating our connection string.

docker network create mongodb

Now we can run MongoDB in a container and attach it to the volumes and network we created above. Docker will pull the image from Docker Hub and run it for you locally.

docker run -it --rm -d -v mongodb:/data/db -v mongodb_config:/data/configdb -p 27017:27017 --network mongodb --name mongodb mongo
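Because this command publishes port 27017, one quick way to confirm that MongoDB is accepting connections before starting the notes-service is a plain TCP connection attempt. A minimal sketch using Node's built-in net module; isPortOpen is a hypothetical helper, and a successful connection only shows that something is listening on the port, not that MongoDB is healthy.

```javascript
const net = require('net');

// Resolve true if a TCP connection to host:port succeeds within timeoutMs.
function isPortOpen(host, port, timeoutMs = 2000) {
  return new Promise((resolve) => {
    const socket = net.createConnection({ host, port });
    const finish = (open) => {
      socket.destroy();
      resolve(open);
    };
    socket.setTimeout(timeoutMs, () => finish(false));
    socket.once('connect', () => finish(true));
    socket.once('error', () => finish(false));
  });
}

// From the host, the published port is reachable on localhost. From a container
// attached to the mongodb network, use the container name 'mongodb' instead.
isPortOpen('localhost', 27017).then((open) => {
  console.log(open ? 'MongoDB port is open' : 'Nothing is listening on 27017');
});
```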

Okay, now that we have a running mongodb, we also need to set a couple of environment variables so our application knows what port to listen on and what connection string to use to access the database. We'll do this right in the docker run command.

docker run -it --rm -d --network mongodb --name notes -p 8081:8081 -e SERVER_PORT=8081 -e DATABASE_CONNECTIONSTRING=mongodb://mongodb:27017/yoda_notes notes-service
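How the notes-service reads these variables is not shown here. As a hypothetical sketch, a Node.js service could pick them up from process.env and fail fast when the connection string is missing, turning the earlier connection error into an explicit message. The loadConfig name and the 8081 fallback are assumptions for illustration.

```javascript
// Hypothetical config loader for a service started with
//   -e SERVER_PORT=8081 -e DATABASE_CONNECTIONSTRING=mongodb://mongodb:27017/yoda_notes
// Written without ?? or ?. so it also runs on the node:12 images used in this series.
function loadConfig(env) {
  const source = env || process.env;
  const port = Number.parseInt(source.SERVER_PORT || '8081', 10);
  if (!Number.isInteger(port) || port <= 0 || port > 65535) {
    throw new Error(`SERVER_PORT must be a valid TCP port, got "${source.SERVER_PORT}"`);
  }
  const connectionString = source.DATABASE_CONNECTIONSTRING;
  if (!connectionString) {
    throw new Error('DATABASE_CONNECTIONSTRING is not set');
  }
  return { port, connectionString };
}

try {
  console.log(loadConfig());
} catch (err) {
  console.error(`Configuration error: ${err.message}`);
}
```

Failing at startup keeps a missing '-e' flag from surfacing later as a less obvious database connection error.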

Let's test that our application is connected to the database and is able to add a note.

curl --request POST --url http://localhost:8081/services/m/notes --header 'content-type: application/json' --data '{"name": "this is a note", "text": "this is a note that I wanted to take while I was working on writing a blog post.", "owner": "peter"}'

You should receive the following json back from our service.

{"code":"success","payload":{"_id":"5efd0a1552cd422b59d4f994","name":"this is a note","text":"this is a note that I wanted to take while I was working on writing a blog post.","owner":"peter","createDate":"2020-07-01T22:11:33.256Z"}}
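The same request can also be sent from Node.js without curl. A minimal sketch using the built-in http module; buildNoteRequest and postNote are hypothetical helpers, and the host, port, path and body simply mirror the curl command above.

```javascript
const http = require('http');

// Build the options and JSON body for POST /services/m/notes on localhost:8081.
function buildNoteRequest(note) {
  const body = JSON.stringify(note);
  const options = {
    hostname: 'localhost',
    port: 8081,
    path: '/services/m/notes',
    method: 'POST',
    headers: {
      'content-type': 'application/json',
      'content-length': Buffer.byteLength(body),
    },
  };
  return { options, body };
}

// Send the note and pass the parsed JSON response (or an error) to callback.
function postNote(note, callback) {
  const { options, body } = buildNoteRequest(note);
  const req = http.request(options, (res) => {
    let raw = '';
    res.setEncoding('utf8');
    res.on('data', (chunk) => { raw += chunk; });
    res.on('end', () => {
      let parsed;
      try {
        parsed = JSON.parse(raw);
      } catch (err) {
        callback(err);
        return;
      }
      callback(null, parsed);
    });
  });
  req.on('error', callback);
  req.end(body);
}
```

For example, with the notes container and MongoDB running, postNote({ name: 'this is a note', text: 'a quick test', owner: 'peter' }, (err, res) => console.log(err || res.code)) should log the same 'success' code as the curl response.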

Conclusion

Awesome! We've completed the first steps in Dockerizing our local development environment for Node.js.

In Part II of the series, we'll take a look at how we can use Docker Compose to simplify the process we just went through.

In the meantime, you can read more about networking, volumes and Dockerfile best practices with the below links: